Following are excerpts from Steve's Jan. 25 webinar hosted by Gurobi Optimization.

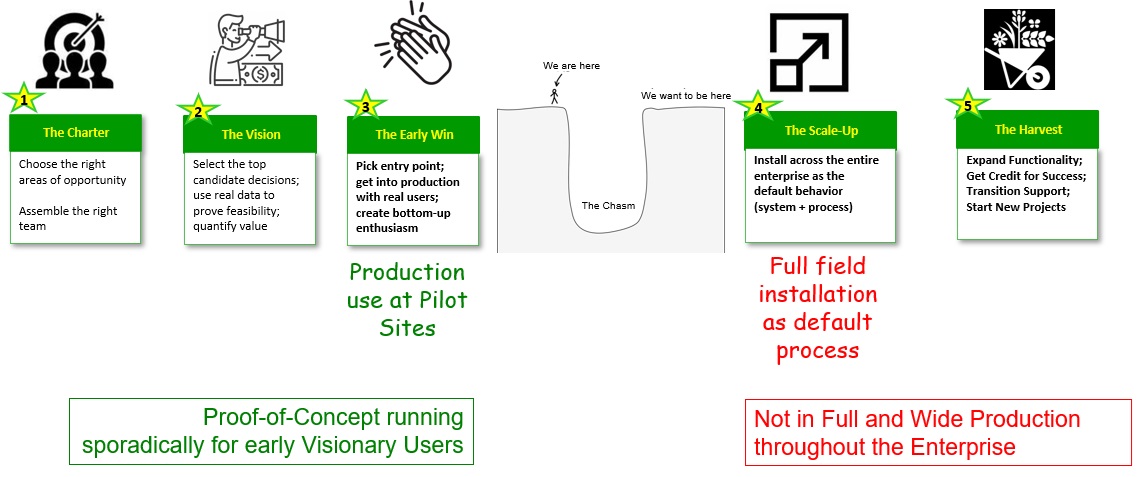

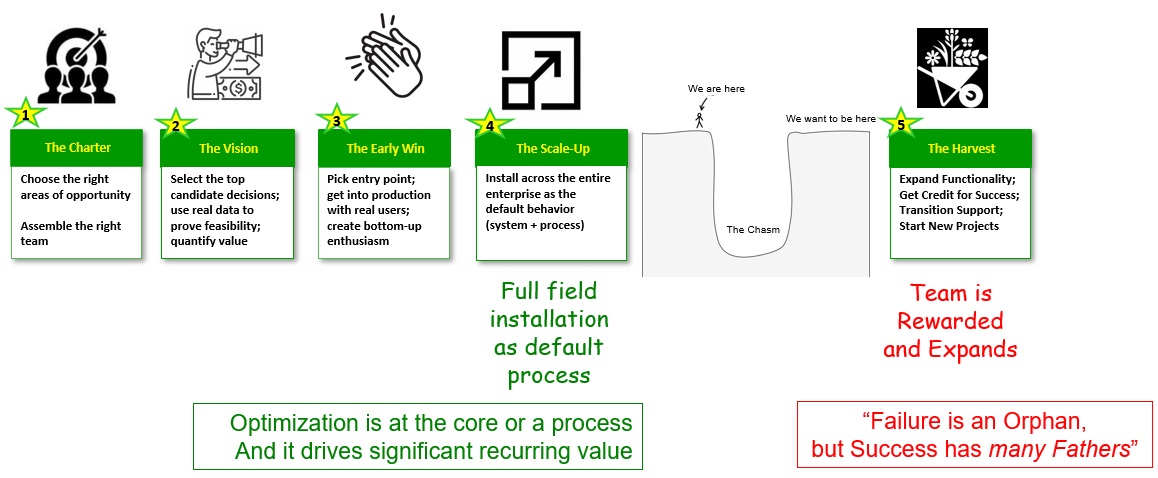

The AI optimization success lifecycle—from the charter to the harvest—represents a wonderful world. However, today we will get away from the “wonderful” and talk about what really happens in the field. Often, colleagues and I see that a chasm forms between the early win and the scale-up. In the early win, a Proof of Concept (PoC) is in production at a pilot site, but the organization has been unable or unwilling to scale it across the enterprise.

This phenomenon is not limited to AI optimization; it is a generic problem in technology. Famously, Geoffrey Moore’s book, “Crossing the Chasm,” addressed how a chasm repeatedly appears between the “early adopters” and the “early majority.”

For example, the iPhone successfully crossed the chasm and largely replaced the push-button phone: today a high percentage of adults worldwide use an iPhone or a similar device. By contrast, one might argue that electric vehicles are still trying to cross the chasm; they remain in the domain of early adoption and have yet to replace fuel-powered automobiles. Very soon, Apple will introduce Vision Pro and hope to sell it to more than early adopters who are tech enthusiasts like yours truly (I have pre-ordered Vision Pro).

Generally, academia is happy with the early win: “Brilliant, you have advanced the state‑of‑the‑art! Here’s your PhD and professorship.” Business, on the other hand, needs to see the scale of the harvest. Since businesses are solving primarily for financial benefit, shouldn’t savings be a sufficient incentive to scale up a solution?

Throughout my career, I’ve seen many very promising projects stuck in pilot use and never scaled into production, even though top executives say, “We’ve got this model that can solve this problem in seconds, and it saves millions.” If you go out in the field, you may well see Excel spreadsheets and people looking at traditional screens, dragging things around with the mouse. Why does this chasm exist? Mainly because early adopters are different from most people.

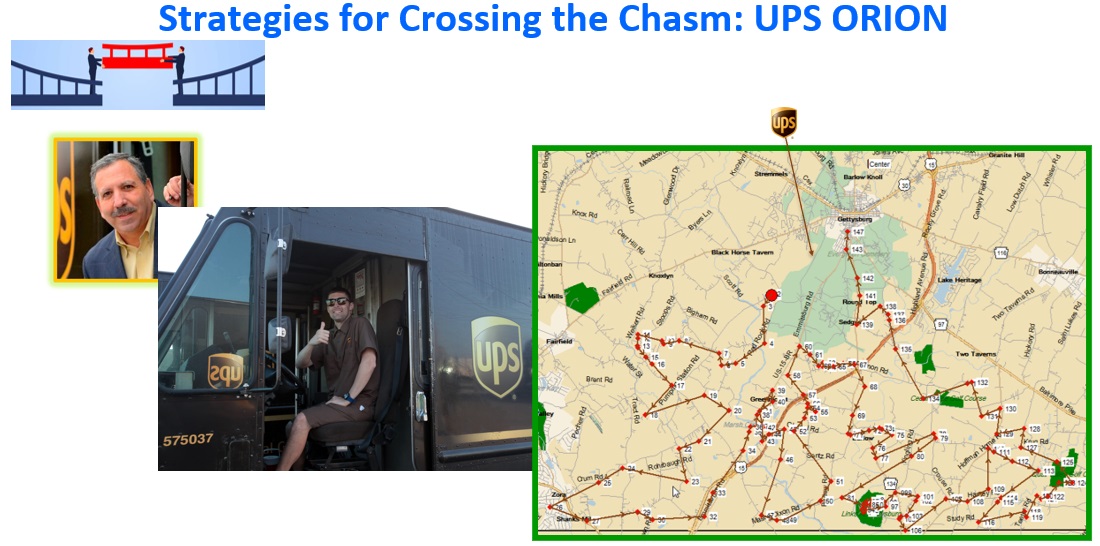

Let’s talk about strategies for crossing this chasm, using a success story featuring a colleague and friend, Jack Levis, and the colossally important ORION Project at UPS, which schedules and routes every package truck in the UPS network. Before ORION, at the beginning of each day every UPS driver was given a list of stops, and it was up to the driver to plan the route based on his own experience.

Jack’s charter was to insert AI optimization into that process. The stakes were very high: saving just one mile for each truck across the entire network would save tens of millions of dollars a year. Jack’s group developed ORION, a custom, state-of-the-art optimization model that could solve for the entire US. The team could prove it worked in cities and suburbs and that it could significantly improve the major business key performance indicators (KPIs). Despite having done the math, however, they found that they could not progress beyond a few pilot tests into full production. Jack was facing the chasm, so he took on deployment as his mission.

Here are five lessons from Jack’s ultimate success with ORION:

1. Get out of HQ and get to the front line.

Jack and his team went out into the field and showed maps to drivers. They learned that the map datasets they were using were terrific but still less accurate than what field personnel knew in their heads. Where their maps suggested one route, a driver would say, for example, “That’s ridiculous, because we know there’s a small private road at the end of the Walmart parking lot, and you can reach that destination very quickly without getting on the main road.” Similarly, drivers would ask, “Why didn’t you just do both stops at the same time, as we would have?”

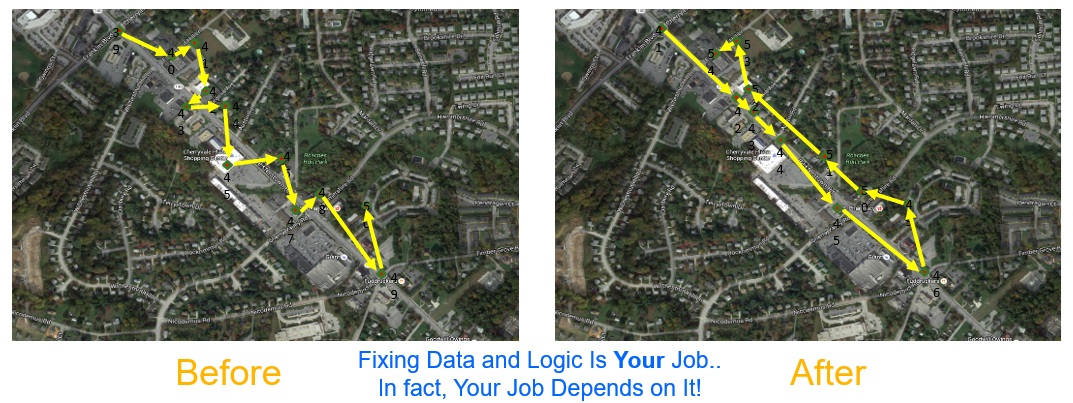

2. Use data science to clean the data and fix the logic.

Practitioners will often say, “Garbage in, garbage out,” referring to bad data and the need for someone else to clean it, but that type of thinking is misguided. Don’t be the person who insists that your sole job is to create algorithms. For example, ORION’s original model used shortest paths to create routes. Drivers pointed out to Jack’s team that some recommendations required crossing back and forth across a street or a median, among other errors. In reality, proceeding down one side of the street and then the other might look longer and entail more driving miles, but it was actually much faster and safer. Jack realized his team had to fix both the data and the logic. I’d like to emphasize this for everyone watching today: your job depends on this activity.

3. Focus on behavior change.

Reflecting on ORION, Jack advised that at some point you have to change the conversation about the way people do their jobs. If you don’t, you’re just “a flavor of the month,” like so many other changes proposed in the corporate world and then forgotten a few weeks later. At UPS, Jack’s team delved deeply into what drivers were trying to accomplish daily. They discussed what happened when drivers couldn’t deliver all their assigned packages: they would feel bad and have to report overtime, among other problems. Jack and his colleagues spent time asking drivers, “How will ORION help you do your job better and have a better life?” and “Can you see yourself using this?” They successfully changed the conversation.

4. Measure impact at each site.

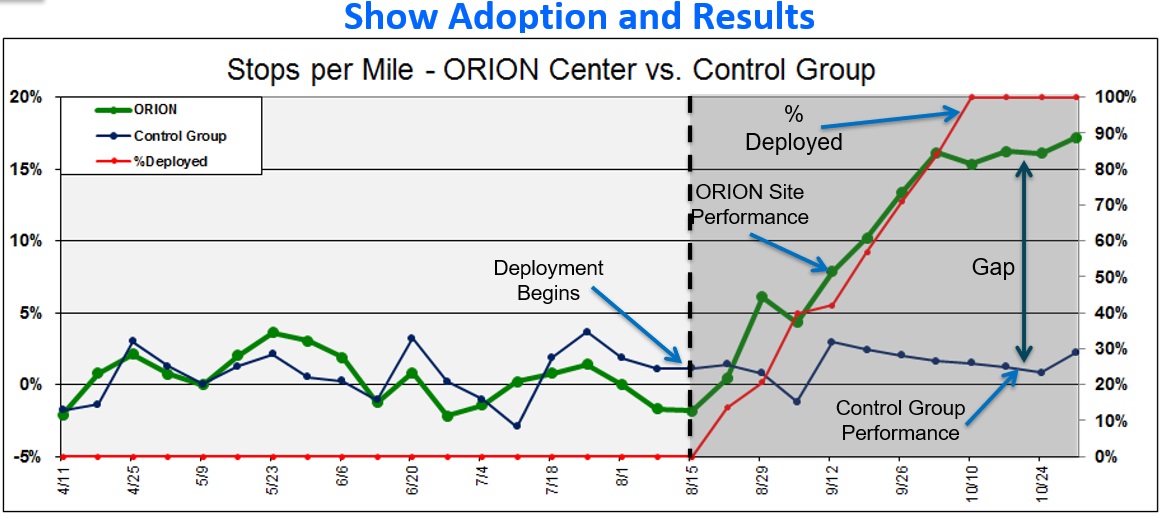

You want to measure real impact in the field, and it’s always helpful to use a control group, as in a pharmaceutical study. At UPS, Jack’s group might at times have half of a group of drivers use the new tool while the other half kept the status quo, and then track the results as adoption proceeded and performance improved.
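To make the control-group idea concrete, here is a minimal sketch that compares a pilot (treatment) group with a status-quo (control) group using the difference in means and Welch’s t-statistic. It is not the method Jack’s team used; the metric (miles per route day), the function name `compare_groups`, and all the numbers are hypothetical and invented for illustration, not UPS data.

```python
from math import sqrt
from statistics import mean, stdev


def compare_groups(treatment, control):
    """Compare a pilot group against a status-quo control group.

    Returns (difference in means, Welch's t-statistic), computed as
    treatment minus control. For a "lower is better" metric such as
    miles driven, a negative difference favors the new tool.
    """
    if len(treatment) < 2 or len(control) < 2:
        raise ValueError("each group needs at least two observations")
    diff = mean(treatment) - mean(control)
    # Welch's standard error does not assume the two groups share a variance.
    se = sqrt(stdev(treatment) ** 2 / len(treatment)
              + stdev(control) ** 2 / len(control))
    return diff, diff / se


# Hypothetical miles per route day for ten drivers (invented, not UPS data).
with_tool = [98.0, 101.5, 97.2, 99.8, 96.4]
status_quo = [103.1, 104.7, 100.9, 105.2, 102.6]

diff, t_stat = compare_groups(with_tool, status_quo)
print(f"difference in means: {diff:.2f} miles, Welch t = {t_stat:.2f}")
```

A t-statistic from a handful of drivers is only a rough signal; with small groups and confounders such as seasonality, a properly designed experiment and test would be needed before drawing firm conclusions.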

5. Prepare stakeholders for randomness and provide visual tools.

As you collect statistics, you have to prepare executives for randomness. In the measurements that Jack’s team used, values went up, which was good, then went down, then went up again. As at UPS, you may have to account for confounding variables such as seasonality, competitive forces, and other factors affecting performance. Give stakeholders visual tools that fit how they consume data so they can evaluate impact. Jack’s group’s charts presented smoothed, minimum, and maximum values, and they demonstrated great improvement overall.
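One simple way to produce smoothed, minimum, and maximum values from a noisy series is a rolling window. The sketch below assumes a trailing window of fixed size and weekly data; the function name `rolling_summary` and the savings figures are hypothetical, and this is not necessarily how Jack’s group built its charts.

```python
from statistics import mean


def rolling_summary(values, window):
    """Summarize a noisy metric over a trailing window.

    For each position where a full window is available, returns a tuple
    (smoothed mean, minimum, maximum) over the last `window` values.
    """
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    summary = []
    for end in range(window, len(values) + 1):
        chunk = values[end - window:end]
        summary.append((mean(chunk), min(chunk), max(chunk)))
    return summary


# Hypothetical weekly savings in $ thousands: the raw series goes up and down.
weekly_savings = [2.0, 3.5, 2.8, 4.1, 3.6, 5.0, 4.4]

for week, (smoothed, low, high) in enumerate(rolling_summary(weekly_savings, 3), start=3):
    print(f"week {week}: smoothed={smoothed:.2f} min={low:.1f} max={high:.1f}")
```

In this invented series the raw values rise and fall from week to week, while the smoothed column rises steadily; showing the min and max alongside it keeps the underlying variability visible rather than hiding it.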

The Second Chasm

Let’s say you’ve scaled up a solution and things look great—does that mean you will avoid the next chasm? “Wait, there’s another chasm?” you ask. Well, you want to get to the harvest, where the team is rewarded and expands into new projects, but it’s not happening.

This chasm exists when a team is effectively “chained” to its successful project, even though the team members want to do new things. The practitioner can resemble an actor who doesn’t want to be typecast but wants to play other roles in other genres: you want colleagues in other areas to call out your successful field implementation and ask for your help.

One reason for this chasm can be described by the axiom, “Failure is an orphan, but success has many fathers.” For example, at UPS, Jack observed that by the third or fourth year so many people were involved with ORION that it could be difficult to remember that his AI optimization group was responsible. A project can become everyone’s win, which is a good thing, but it can prevent a harvest. Fortunately, Jack got his harvest: for UPS he won the 2016 INFORMS Franz Edelman Award, the most prestigious award for achievement in operations research practice. As part of the award process, the judges independently verified more than $300 million in annual savings. Furthermore, ORION greatly reduced miles driven at UPS, yielding a significant green benefit.

Short of the Edelman award, how can you achieve the harvest in your organization? Here are five practices.

1. Create a consistent pipeline

Consistently deliver projects that run from beginning to end and don't get stuck in pilot.

2. Embrace the New Era of Agile AI

Everyone on this webinar understands that AI is an explosive attention-grabber. Keep in mind that executives are looking at the positive and negative aspects of AI. Questions arise: “Can we trust it? What about hallucinations, the mistakes that it’s making?” We practitioners have to figure out how to build AI technology that our users embrace and trust.

What causes AI hallucinations? AI-powered solutions can produce amazing results, but often even their creators can’t explain how their black boxes arrive at them. This is an issue with many large language models (LLMs) and other models. I strongly believe you should not start with fancy technology under the hood, like a new algorithm or LLM. You should start by seeking recommendations that are accurate, clear, fast, and robust.

3. Build Your AI Reputation

I believe you will build your AI reputation on trust—not by the tools you use. When you establish trust, your colleagues will be assured that you or your team will deliver. They request your expert help because they are confident you are going to make it work.

4. The Single Most Important Metric: TTP

Consider the single most important metric for all projects: Time to Payback (TTP). This is the time it takes for a project to break even after it launches, taking into account all benefits and costs, including labor and software. For your projects, I encourage you to aim for a TTP of less than one year. When securing budget from executives, measuring in time can be much more powerful than measuring Return on Investment (ROI). When we practitioners create the right project and bring energy and enthusiasm, we can in some cases achieve 10x or even 100x the investment. TTP shows how impressively quick that payback is.
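The TTP definition can be made concrete with a small calculation. This is a minimal sketch, assuming monthly granularity, a single upfront cost, running costs (labor, software) netted against benefits each month, and no discounting; the function name `time_to_payback` and all figures are hypothetical.

```python
def time_to_payback(upfront_cost, monthly_benefits, monthly_costs):
    """Return the first month (1-based) after launch in which cumulative
    benefits have covered the upfront cost plus all running costs, or
    None if the project has not broken even within the given horizon.
    """
    if len(monthly_benefits) != len(monthly_costs):
        raise ValueError("benefit and cost series must have the same length")
    cumulative = -upfront_cost
    for month, (benefit, cost) in enumerate(zip(monthly_benefits, monthly_costs), start=1):
        cumulative += benefit - cost  # running cost: labor, software, etc.
        if cumulative >= 0:
            return month
    return None


# Hypothetical project: $500k to build, savings ramp up as sites adopt,
# and $20k/month for running labor and software licenses.
benefits = [0, 20_000, 60_000, 100_000] + [120_000] * 8
costs = [20_000] * 12

ttp = time_to_payback(500_000, benefits, costs)
print(f"TTP: {ttp} months")  # under 12 months meets the one-year target
```

Expressing the result as a number of months, rather than as a ratio like ROI, gives executives a single date by which the project has paid for itself.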

5. Blending the Long and the Short

In the short term, a consistently reliable and short TTP will earn you investors and attract project sponsors. In the long term, you should aspire to be an employer of choice, attracting and retaining superb AI practitioners. Additionally, build partnerships with outside experts for a constant stream of motivation, learning and innovation.

Thank you and I hope these thoughts help you cross the chasms that you encounter.
