Following is a lightly edited excerpt from Steve’s presentation for “New Frontiers of Analytics Practice” at the INFORMS Annual Meeting in November.

Princeton Consultants helps organizations move great insights into AI deployment. Our value proposition is that we deliver high speed and quality, and reduced risk. For the latter, we have developed a series of scoreable risk factors called the “Princeton 20.” For a typical first-generation AI optimization project, we work with management and tag different factors that should be addressed to make the project as successful and as low risk as possible.

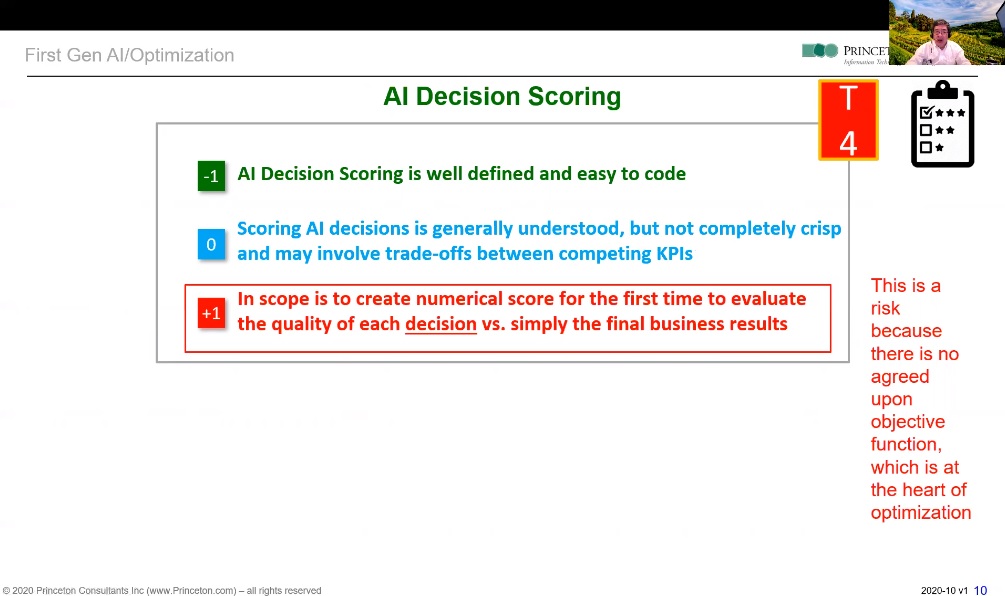

One of the Princeton 20 risk factors is “AI Decision Scoring.” In assessing a project, we ask, “Is there a way of scoring a successful decision?” Client executives might respond, “Actually, there is no current scoring system for making these decisions. We just use rules and people’s intuition.”

When there is no real consensus around the rules, there is risk. Is the data used to make the decisions completely available in real time on a computer, or are people relying on head knowledge, emails, or other informal sources? If the latter, there again is risk.

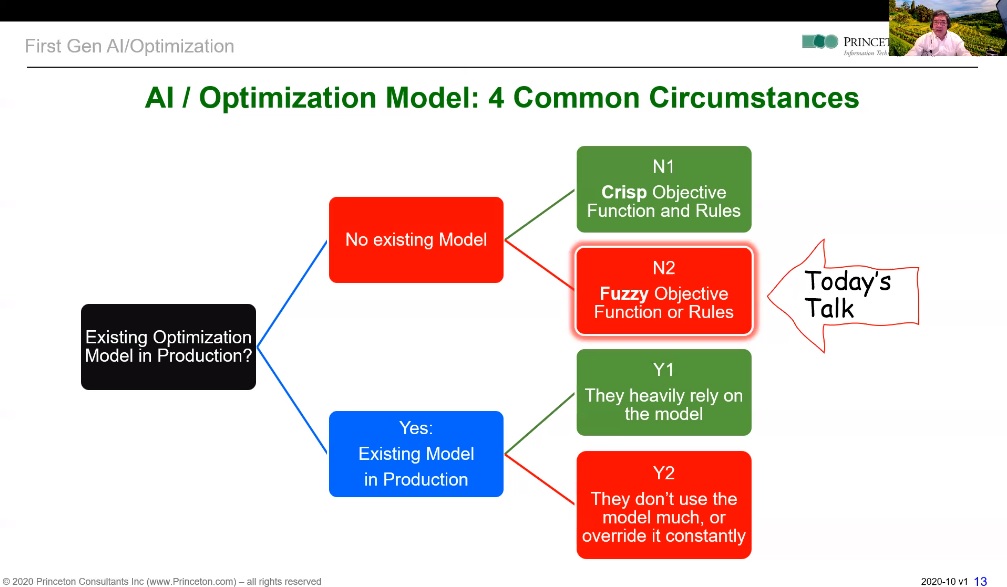

Here is a common set of questions we might ask. Is there an existing AI model in production, yes or no? If yes and we observe it, is the field actually using it? If the field is relying on it very heavily, great! If not, there is risk. If there is a model in production but it is not used much or is constantly overridden, or if there is no existing model in production, there is greater risk.

In the above slide, in N1 there is a crisp objective function of rules that we understand. More commonly in our firm’s engagements, there is no existing model and there is a fuzzy objective function of rules around the decision-making process. I don’t think you will find many N1 cases, because for a large organization and for important decisions, if a model could have been built easily and quickly, it probably would be there already. It is likely that there is no model because there are challenges and it is a difficult problem.
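
To make these questions concrete, here is a minimal sketch of how the answers could be turned into a list of risk flags. It is an illustration only, not the Princeton 20 scoring method: the class name, field names, and flag wording are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DecisionContext:
    """Hypothetical answers to the assessment questions (names are illustrative)."""
    has_production_model: bool
    field_relies_on_model: bool   # only meaningful when a model is in production
    objective_is_crisp: bool      # is there consensus on the rules and objective?
    data_fully_in_systems: bool   # is all decision data available on a computer?


def risk_flags(ctx: DecisionContext) -> List[str]:
    """Return one flag per risk described in the text; an empty list means none raised."""
    flags = []
    if not ctx.has_production_model:
        flags.append("no existing model in production")
    elif not ctx.field_relies_on_model:
        flags.append("model in production is rarely used or often overridden")
    if not ctx.objective_is_crisp:
        flags.append("fuzzy objective: no real consensus around the rules")
    if not ctx.data_fully_in_systems:
        flags.append("decision data partly in heads, emails, or other places")
    return flags


# The case described as most common: no model and a fuzzy objective.
typical = DecisionContext(
    has_production_model=False,
    field_relies_on_model=False,
    objective_is_crisp=False,
    data_fully_in_systems=True,
)
for flag in risk_flags(typical):
    print(flag)
```

A list of flags, rather than a single number, keeps each risk visible so it can be discussed with management one factor at a time.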

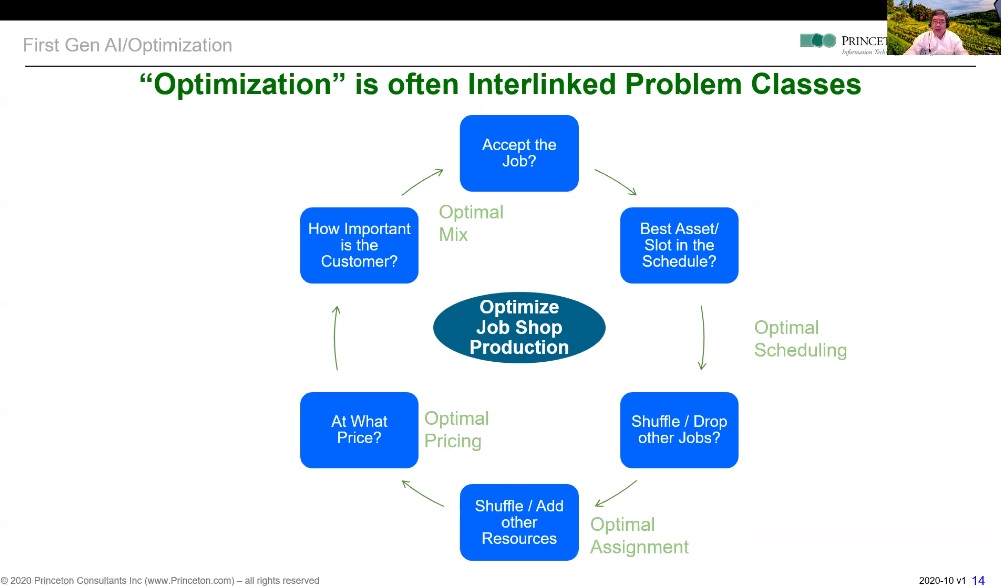

Let’s consider a first-generation problem such as optimized job shop production. Often, it is really a series of models that are working together. First, we want to decompose the problem. We start with one and if we can get it solved, awesome. Then we move on to other adjacent problems or get deeper on the first problem.

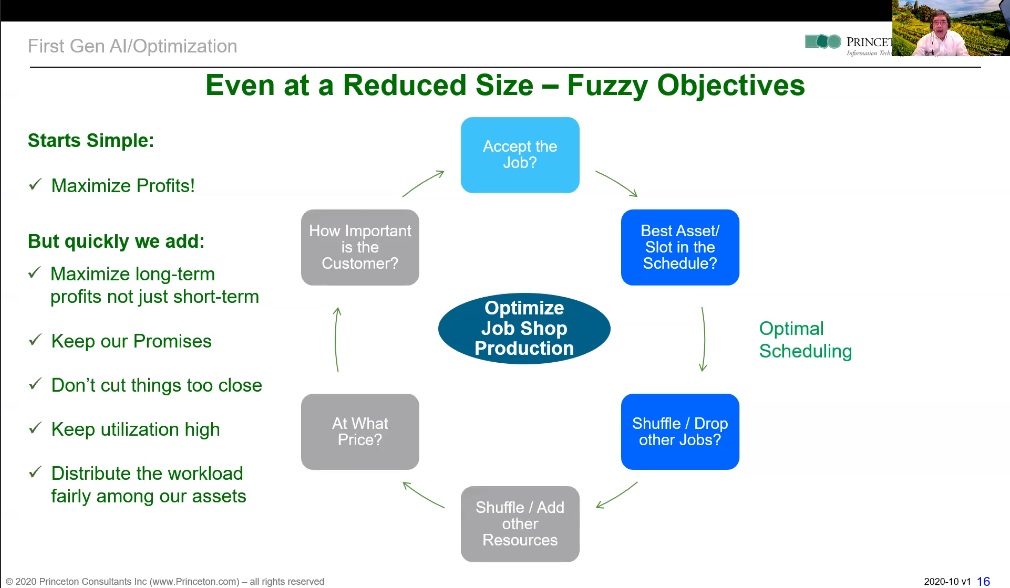

Say a job is coming in. We want to see if there is a slot for it on the schedule and/or if we should drop or shuffle other jobs to make room for it. This is so-called optimal scheduling. At first, this problem can seem very easy in terms of the objectives: we will simply take the set of jobs that maximizes profits. However, very quickly we will find that the objective function is a little more complex than was first presented. For example, what maximizes profits in the next 24 hours might not be the same answer that maximizes profits over the next quarter.

If we are presented with a repeating job, we should account for all its different components and instances. We may be presented with a job that appears to be low profit because there is a discount to a large customer who gives us lots of jobs. We are not looking to optimize the next 24 hours; we are trying to optimize our profits over the next quarter or year.
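
A small sketch shows how the planning horizon can flip the answer. Everything here is hypothetical: the `JobOffer` fields, the margins, and the simplifying assumption that accepting the first instance of a repeating job secures its later instances at the same margin.

```python
from dataclasses import dataclass


@dataclass
class JobOffer:
    name: str
    margin: float            # profit from a single instance of the job
    repeats_per_week: float  # expected further instances per week; 0 for a one-off


def value_over_horizon(offer: JobOffer, horizon_days: float) -> float:
    """Profit from the first instance plus the expected repeats inside the horizon."""
    expected_repeats = offer.repeats_per_week * horizon_days / 7
    return offer.margin * (1 + expected_repeats)


# Made-up numbers: a full-price one-off versus a discounted repeating job.
one_off = JobOffer("one-off, full price", margin=1000.0, repeats_per_week=0.0)
repeat = JobOffer("repeating, discounted", margin=600.0, repeats_per_week=2.0)

for horizon_days in (1, 91):  # the next 24 hours versus roughly one quarter
    best = max((one_off, repeat), key=lambda o: value_over_horizon(o, horizon_days))
    print(horizon_days, "days:", best.name)
```

With these illustrative numbers, the one-off job wins over 24 hours and the discounted repeating job wins over a quarter, which is the gap between the objective as first presented and the objective the business actually cares about.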

Let’s consider that we like to keep our promises. We are willing to drop jobs off the schedule, but we really hate to do that because we had said yes, and now we hate to call back and say no.

As we are evaluating what constitutes a better work schedule, we do not cut things too close. Just because a job is supposed to run for 45 minutes does not mean we make the next job start right afterward. We add a little bit of padding so if something goes wrong, we avoid a domino effect. On the other hand, we do not want to overly pad, because we want to keep utilization high. Also, particularly when we are undersold, we distribute the workload fairly among our different assets or jobs. We do not want to give all the work to one crew, leaving the other crew with nothing to do.
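
One way to picture these competing preferences is a single schedule score that starts from profit and subtracts a penalty for each soft objective. The sketch below is an assumption-laden illustration, not our production model: the `Job` fields, the penalty terms, and every weight are invented for the example, and in practice settling those weights is exactly where the fuzziness shows up.

```python
from dataclasses import dataclass
from statistics import pstdev
from typing import List, Sequence


@dataclass
class Job:
    crew: str
    start: int      # minutes from the start of the shift
    duration: int   # planned run time in minutes
    profit: float


def score_schedule(
    jobs: Sequence[Job],
    crews: List[str],
    shift_minutes: int,
    dropped_promised: int = 0,  # jobs we had said yes to and then removed
    min_gap: int = 10,          # desired padding between consecutive jobs
    w_drop: float = 500.0,      # all weights below are hypothetical
    w_tight: float = 50.0,
    w_idle: float = 1.0,
    w_unfair: float = 2.0,
) -> float:
    """Profit minus penalties for broken promises, tight gaps, idle time, and imbalance."""
    profit = sum(j.profit for j in jobs)
    tight_gaps = 0
    minutes_by_crew = {}
    for crew in crews:
        crew_jobs = sorted((j for j in jobs if j.crew == crew), key=lambda j: j.start)
        minutes_by_crew[crew] = sum(j.duration for j in crew_jobs)
        # Count consecutive jobs scheduled with less padding than we want.
        for prev, nxt in zip(crew_jobs, crew_jobs[1:]):
            if nxt.start - (prev.start + prev.duration) < min_gap:
                tight_gaps += 1
    idle = len(crews) * shift_minutes - sum(minutes_by_crew.values())
    imbalance = pstdev(list(minutes_by_crew.values()))  # 0 when crews share work evenly
    return (profit - w_drop * dropped_promised - w_tight * tight_gaps
            - w_idle * idle - w_unfair * imbalance)


crews = ["A", "B"]
balanced = [Job("A", 0, 45, 100.0), Job("B", 0, 45, 100.0)]
piled_up = [Job("A", 0, 45, 100.0), Job("A", 45, 45, 100.0)]  # back to back, one crew
print(score_schedule(balanced, crews, 480) > score_schedule(piled_up, crews, 480))
```

Both example schedules earn the same profit, but the second one starts a job the minute the previous one ends and leaves one crew with nothing to do, so it scores lower. Changing any weight changes which schedule counts as "better," which is why these objectives need consensus before a model is built.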

These are representative challenges when you move from insight to deployment. Initially, a problem can seem fairly easy, but as you get to real-world schedule-building, there are fuzzy objectives that require mitigation.

In our next blog post, Steve will present 10 tactics to manage risks in AI Decision Scoring. For more information, visit our AI Project Risk Management website.