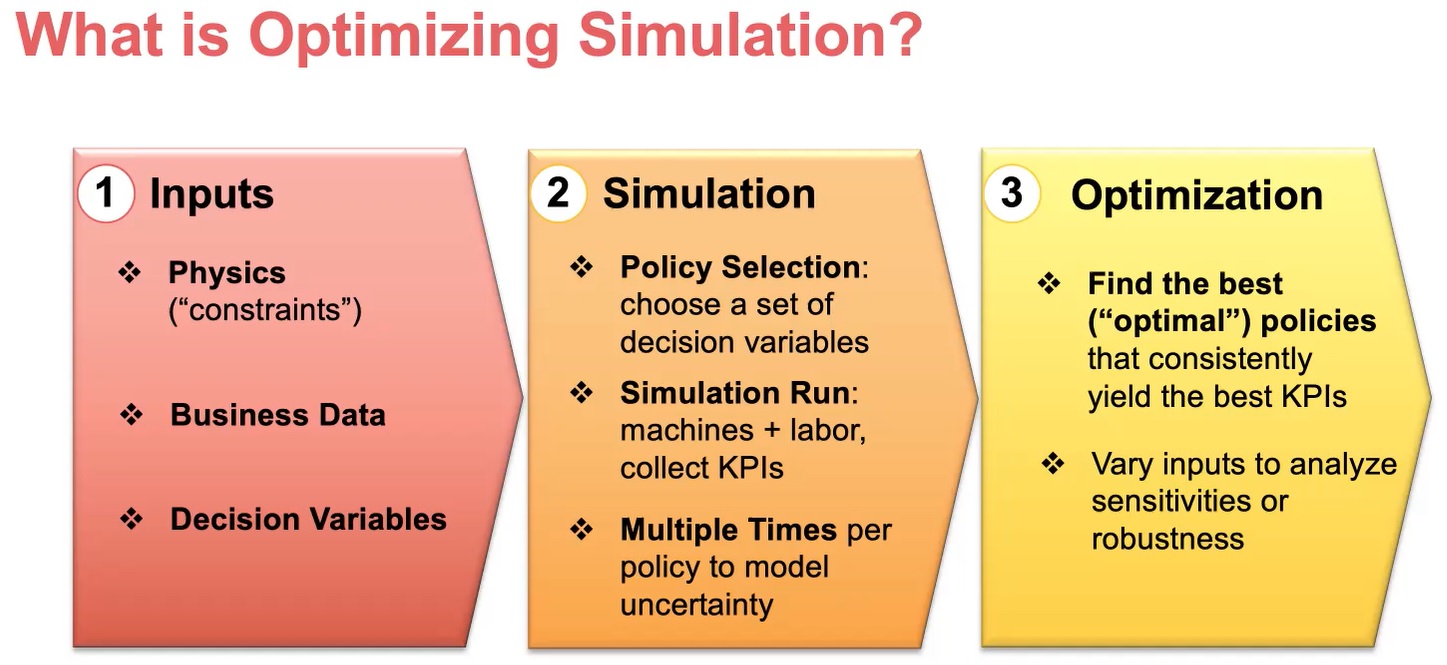

We define optimizing simulation as the application of optimization principles to simulation, taking it from predictive analytics to prescriptive analytics. Optimization is layered on top of inputs and a simulation to determine optimal policies, decisions, and even operating parameters based on KPIs. This allows us not only to simulate environments, facilities, and the different decisions that people need to make, but also to optimize those scenarios.

Simulation is often telling you what you can expect—it’s a forecast. When we layer on the optimization, we identify the best decision for the scenario. The optimization also enhances sensitivity and robustness analysis.
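To make the layering concrete, here is a minimal Python sketch, not a model from any client engagement. A toy simulation forecasts the average daily cost for a given number of machines, and a simple search over candidate values picks the best decision. The demand range, costs, and function names are all assumptions made up for illustration.

```python
import random

def simulate_daily_cost(num_machines, seed, days=200):
    """Toy simulation: average daily cost when running `num_machines` machines.

    Hypothetical model: random daily demand, each machine serves up to
    10 jobs, and every unserved job incurs a penalty.
    """
    rng = random.Random(seed)
    machine_cost = 100.0   # assumed cost per machine per day
    penalty = 50.0         # assumed penalty per unserved job
    total = 0.0
    for _ in range(days):
        jobs = rng.randint(20, 60)                  # random daily demand
        unserved = max(0, jobs - 10 * num_machines)
        total += machine_cost * num_machines + penalty * unserved
    return total / days

def average_cost(num_machines, seeds):
    """Average the KPI over several replications (one per seed)."""
    return sum(simulate_daily_cost(num_machines, s) for s in seeds) / len(seeds)

def optimize_machines(candidates, seeds):
    """Optimization layer: choose the candidate with the lowest simulated cost."""
    return min(candidates, key=lambda n: average_cost(n, seeds))

best = optimize_machines(candidates=range(1, 9), seeds=range(5))
print("best number of machines:", best)
```

The simulation alone answers "what cost should we expect with four machines?" The search around it answers "how many machines should we run?" Reusing the same seeds for every candidate keeps the comparison fair, because each option faces the same random demand.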

Princeton Consultants has developed five steps for building an optimizing simulation rapidly, when business urgency leaves just days or weeks to build it and help executives make decisions.

Step 1. Perform an iterative design process to achieve the Minimum Viable Product (MVP).

Focus on where you see the value and avoid spending time elsewhere. Define the smallest set of what is needed to succeed—and nothing more. What are the key scenarios? What data and business rules do you want to include? What metrics do you want to track against? This can be challenging, because client executives often want to add a lot of realism and detail that they think makes a better simulation or optimization model. Guard against a false sense of precision—as when a client wants to add decimal points to values for an optimization model’s coefficients, costs, and penalties. When you’re rapidly developing, every little piece you insert costs money and time to develop, test, and validate—and it adds to the complexity and the risk.

A best practice is to identify what metrics to baseline against at the very beginning, to ensure they are being captured. You don’t want to learn in the middle or, worse, toward the end of a project that the simulation is calculating metrics that require comparison to “real” metrics that may not even exist, and that weeks or months are needed to obtain them.

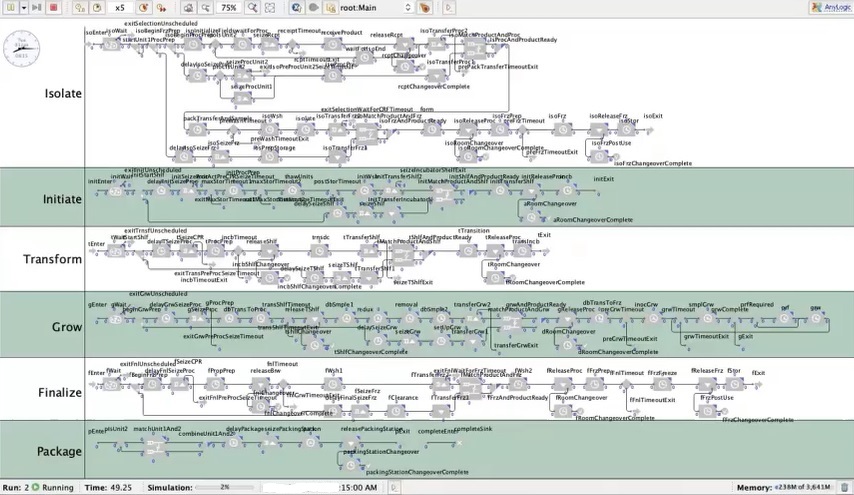

Another tip is to avoid an unnecessary level of detail. At a biotech manufacturer client engaged in trailblazing personalized gene therapy, some of the executives prided themselves on the medicine and biology, and initially wanted scientific completeness reflected in the models. In this case (see graphic below), the simulation was concerned with product flow through machines and with deciding who gets what resource when. Adding more detail didn’t provide more value: aggregating many of the steps had no impact on the results we were modeling. The cost of the realism did not add up to real analytical value.

Step 2. Adhere to core development principles for optimizing simulation.

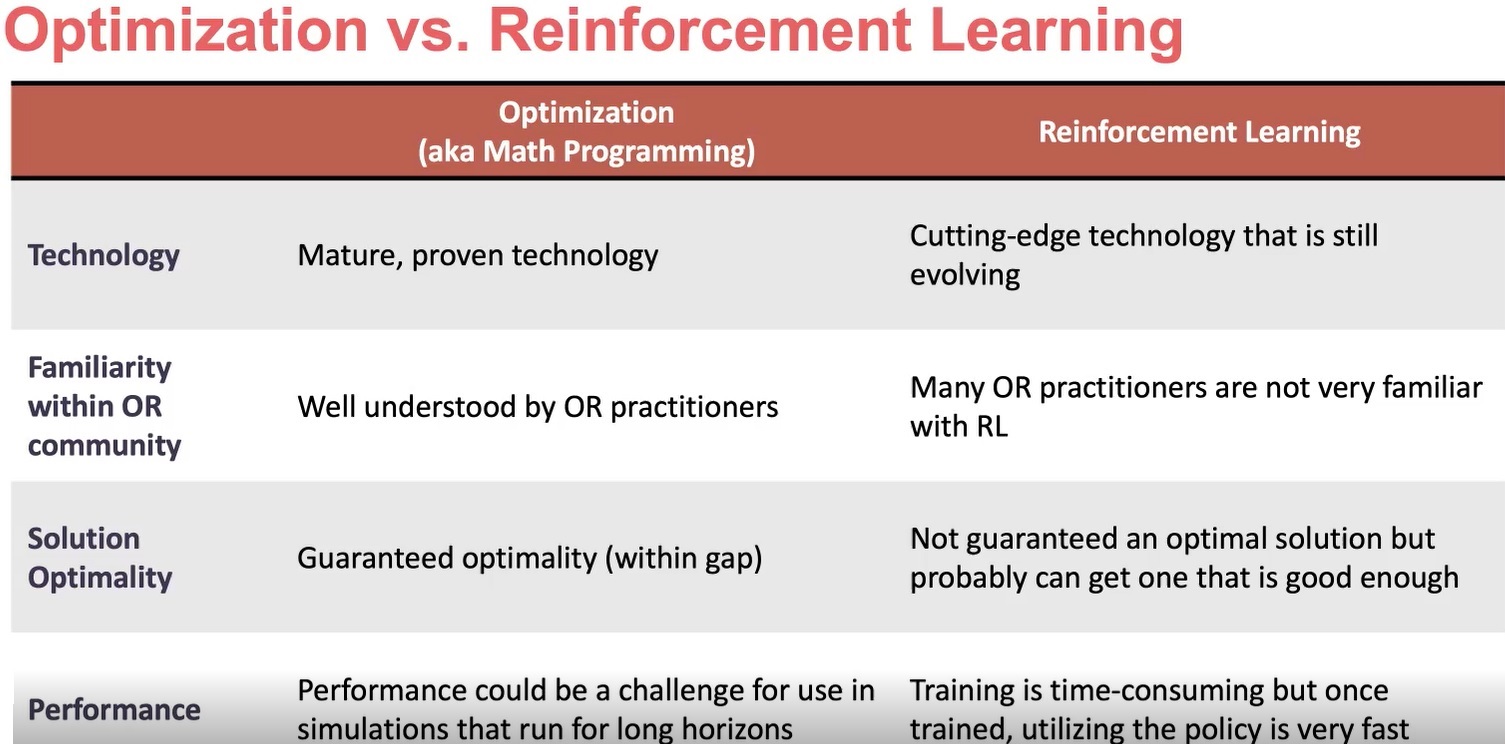

First, choose your simulation approach. Discrete-event simulation is the classic choice. For agent-based simulation, we use AnyLogic because of its flexible approach. INFORMS routinely publishes reviews of the various simulation packages, which are a great resource when choosing one.

Next, consider the benefits and limitations of your optimization approach. Traditionally, by “optimization,” we meant math programming: MIP or LP. Nowadays at Princeton Consultants we see optimization as more of an umbrella category. We see the value of machine learning, deep learning, and reinforcement learning. We are working with Pathmind, a package that goes with AnyLogic, to explore cases where we can perform optimization with reinforcement learning and get results similar to what we would see with MIP. Alternatives to MIP can be helpful when a simulation runs many times and performance is critical. It can also be worthwhile to explore metaheuristics such as genetic algorithms, tabu search, particle swarm optimization, and ant colony optimization.
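As one illustration of a metaheuristic wrapped around a simulation, the sketch below is a bare-bones tabu search in Python. It treats the simulation as a black box that returns a cost for a staffing decision. The `simulated_cost` function, its workloads, and its wage figure are hypothetical stand-ins, and a production tabu search would usually add refinements such as aspiration criteria.

```python
from collections import deque

def simulated_cost(staff):
    """Stand-in for one simulation run (hypothetical and deterministic here)."""
    workload = (12, 30, 18)   # assumed work units at each of three stations
    wage = 4.0                # assumed cost per staff member
    # Congestion cost falls as staff rises; wage cost rises linearly.
    return sum(w / s + wage * s for w, s in zip(workload, staff))

def neighbors(staff, low=1, high=10):
    """All decisions that differ by one staff member at one station."""
    for i in range(len(staff)):
        for step in (-1, 1):
            value = staff[i] + step
            if low <= value <= high:
                yield staff[:i] + (value,) + staff[i + 1:]

def tabu_search(start, iterations=50, tabu_size=10):
    current = best = start
    best_cost = simulated_cost(best)
    tabu = deque([start], maxlen=tabu_size)   # recently visited decisions
    for _ in range(iterations):
        candidates = [n for n in neighbors(current) if n not in tabu]
        if not candidates:
            break
        # Move to the best non-tabu neighbor, even if it is worse than current.
        current = min(candidates, key=simulated_cost)
        tabu.append(current)
        cost = simulated_cost(current)
        if cost < best_cost:
            best, best_cost = current, cost
    return best, best_cost

best, best_cost = tabu_search(start=(5, 5, 5))
print(best, best_cost)
```

Because the search only needs a cost for each candidate, the same loop works whether the cost comes from a formula, as here, or from a full simulation run.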

When to use agent-based simulation? If the entities can be naturally represented as agents, that’s one positive indicator, especially if they’re interacting. For example, we have done simulations of a rail terminal, where there are hostler drivers, trucks, and other workers moving around in a GIS space with spatial awareness of each other. What one worker does affects another’s work in terms of order and dependency. If the agents need to either learn or adapt their behavior, that’s another sign to use agent-based simulation. Pandemic modeling is a key use case for agent-based simulation. For a beverage manufacturer examining COVID-19 policies at a plant, we modeled workers, some of them contagious, possibly spreading the virus as they walked around rather than sitting stationary.
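The idea of interacting agents can be sketched in a few lines of Python. This is a deliberately simplified toy and not the plant model described above. Workers wander a grid, and a worker who shares a cell with a contagious colleague may become infected. The grid size, movement rule, and transmission probability are all assumptions for illustration.

```python
import random
from dataclasses import dataclass

@dataclass
class Worker:
    x: int
    y: int
    infected: bool = False

def step(workers, rng, size, spread_prob):
    # Each agent moves at most one cell and stays on the floor grid.
    for w in workers:
        dx, dy = rng.choice([(0, 1), (0, -1), (1, 0), (-1, 0), (0, 0)])
        w.x = min(size - 1, max(0, w.x + dx))
        w.y = min(size - 1, max(0, w.y + dy))
    # Agents interact: sharing a cell with an infected worker may transmit.
    infected_cells = {(w.x, w.y) for w in workers if w.infected}
    for w in workers:
        if (not w.infected and (w.x, w.y) in infected_cells
                and rng.random() < spread_prob):
            w.infected = True

def run(num_workers=30, size=8, steps=100, spread_prob=0.3, seed=1):
    rng = random.Random(seed)
    workers = [Worker(rng.randrange(size), rng.randrange(size))
               for _ in range(num_workers)]
    workers[0].infected = True          # one contagious worker at the start
    history = []
    for _ in range(steps):
        step(workers, rng, size, spread_prob)
        history.append(sum(w.infected for w in workers))
    return history

history = run()
print("infected after", len(history), "steps:", history[-1])
```

A policy question, such as whether staggered shifts reduce spread, then becomes a matter of changing the movement rule or the number of workers on the floor and comparing the resulting histories.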

Step 3. Establish a methodology for successfully baselining.

If your simulation cannot accurately model the as-is state, your users are not going to have any confidence in what you say about the future state and policy modeling. Baselining is standard in simulation and there is a lot of literature on the basic steps, but sometimes you run into challenges with the traditional approach. One challenge we encountered when baselining the startup biotech manufacturer was that there was not an existing process, as they were in clinical trials. How do you baseline against a state that does not exist? Start small, always iterating, and layer on the behavior, so it is easier for the users to validate. Create a test suite of inputs, outputs, volumes, and expected results. It is not common to see a set of test cases with a simulation or an optimization model, but it can be beneficial to see how results change over time. Have your subject-matter experts dialed into the process, so they can review results, too.
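A test suite of this kind can be very small. The Python sketch below shows one possible shape: each case pairs inputs with expected KPI values and a tolerance, and a checker reports any KPI that drifts outside it. The `toy_simulation` function, the KPI names, and the numbers are placeholders, not data from a real project.

```python
from dataclasses import dataclass

@dataclass
class BaselineCase:
    name: str
    inputs: dict
    expected: dict      # KPI name -> expected (nonzero) value
    tolerance: float    # allowed relative deviation, e.g. 0.05 for 5%

def toy_simulation(daily_volume, machines, capacity_per_machine=10):
    """Placeholder for the real simulation; returns KPIs as a dict."""
    capacity = machines * capacity_per_machine
    processed = min(daily_volume, capacity)
    return {"throughput": processed, "utilization": processed / capacity}

def check_baseline(simulate, cases):
    """Run every case and collect KPIs that fall outside tolerance."""
    failures = []
    for case in cases:
        actual = simulate(**case.inputs)
        for kpi, expected in case.expected.items():
            # Relative deviation; assumes expected values are nonzero.
            deviation = abs(actual[kpi] - expected) / abs(expected)
            if deviation > case.tolerance:
                failures.append((case.name, kpi, expected, actual[kpi]))
    return failures

cases = [
    BaselineCase("normal day", {"daily_volume": 35, "machines": 4},
                 {"throughput": 35, "utilization": 0.875}, tolerance=0.05),
    BaselineCase("peak day", {"daily_volume": 60, "machines": 4},
                 {"throughput": 40, "utilization": 1.0}, tolerance=0.05),
]
failures = check_baseline(toy_simulation, cases)
print("cases outside tolerance:", failures)
```

Rerunning the same cases after every iteration shows how results change as behavior is layered on, and gives subject-matter experts a fixed set of numbers to review.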

Step 4. Employ an iterative review cycle that helps reach consensus.

Start with a quick, initial iteration to get SMEs on board with the vision. It can be scary to use an Agile methodology for an optimization model because you don’t have all the features in the initial version, but proceed with a partial simulation even if it is far from complete. Start with an initial set of features, show it to the users, get their feedback, and start baselining. Then come back and add more constraints and more functionality. Users will identify missing KPIs and whether tuning is needed. Check in frequently with the team to ensure everyone is on board, and track changes as well as upcoming work so there is agreement about scope. It is critical to attain sufficient detail and functionality, then stop and start looking at analysis.

Be upfront with SMEs because you need them to be plugged in. Strong leadership is key to doing this quickly, and you need to understand your priorities. Feature prioritization is a hallmark of Agile: you have a prioritized backlog, so you know what to work on and what your success criteria are. Often, business users who are very knowledgeable about a system see lots of things they want to change. During rapid development, simulators are digital twins only to the extent needed to achieve the desired results. Once you have all the key aspects and everyone agrees, you can start running many scenarios, conducting robustness analysis, sensitivity analysis, and optimization, and analyzing the results.

Step 5. Follow best practices in validation to facilitate business process changes.

Simulation is effective for validation because it speaks business executives’ language. They can see the simulation looks like their facility and their process, and the numbers look like their operational metrics. Conversely, pure optimization offers equations and a formulation document that business executives won’t read. If you vary the inputs, you can get a deeper understanding of sensitivity and robustness in a way that business users can understand. An Agile iterative approach helps them feel engaged and heard throughout the process. An optimizing simulation is meant to generate unexpected results, which can evoke resistance to change. The key is to bring the business users along through every step.
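Varying the inputs can be as simple as a one-at-a-time sweep. The Python sketch below changes each input by plus or minus 10 percent and reports the relative change in a KPI. The `toy_kpi` model and its base values are hypothetical; in practice the model call would be a simulation run, ideally averaged over several replications.

```python
def toy_kpi(demand, machines, cycle_time):
    """Hypothetical KPI: hours needed to process the demand."""
    return demand * cycle_time / machines

def one_at_a_time(model, base, change=0.10):
    """Vary each input by +/- `change` and report the relative KPI change."""
    base_value = model(**base)
    report = {}
    for name, value in base.items():
        low = model(**{**base, name: value * (1 - change)})
        high = model(**{**base, name: value * (1 + change)})
        report[name] = ((low - base_value) / base_value,
                        (high - base_value) / base_value)
    return report

base = {"demand": 200.0, "machines": 5.0, "cycle_time": 0.5}
for name, (low, high) in one_at_a_time(toy_kpi, base).items():
    print(f"{name}: -10% input -> {low:+.1%} KPI, +10% input -> {high:+.1%} KPI")
```

A table like this is easy for business users to read: it shows which inputs move the KPI most, and therefore which estimates deserve the most scrutiny.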

If you find resistance, quell the instinct to gloss over it. Meet resistance head on. Bring in the skeptical executives and help them get on board, and ultimately they will be your biggest supporters when it comes to implementing the changes.

Visualization

Simulation packages come with great visualizations, which entail a bit of eye candy. The visualizations are good for early validation (e.g. you can see if products are not flowing correctly) and for debugging (e.g. if there is a bottleneck in the system and things start backing up, you want to address how it got to that point). These visualizations are not a replacement for full‑scale analysis or rigorous baselining, so don’t overuse them.

Final Thoughts

The more you can iterate, the better: getting feedback and turning around changes quickly is key. Although involving decision makers early and often may seem to slow down the process, ultimately you will not be waiting until the end of the project for them to ask for a lot of changes that necessitate a rewrite. Use an Agile approach to help you prioritize and implement requirements. Find an existing tool or framework that meets your needs and will accelerate your development; you don’t have time to reinvent the wheel.

To learn more about optimizing simulation, email us to set up a call with Patricia.