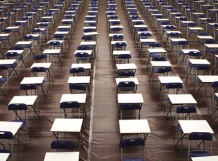

A few years ago, I served on an INFORMS committee responsible for reviewing the questions that appear on the INFORMS Certified Analytics Practitioner exam. An interesting challenge for INFORMS is to take an item bank of questions and choose a subset to appear on the exam that meets certain conditions on the balance of topics and the difficulty of the questions. This challenge isn't unique to INFORMS; it arises in many industries that use exams to certify specialists, and even for the SAT used in the college admissions process.
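To make the item-selection problem concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the item bank, the topic names, the 1-5 difficulty scale, and the `assemble_exam` helper are hypothetical, and the exhaustive search stands in for the optimization model a real exam program would likely use. It simply picks the subset that meets a quota per topic and whose average difficulty is closest to a target.

```python
from itertools import combinations

# Hypothetical item bank: (question id, topic, difficulty on a 1-5 scale).
ITEM_BANK = [
    ("q1", "modeling", 2),
    ("q2", "modeling", 4),
    ("q3", "modeling", 5),
    ("q4", "data", 1),
    ("q5", "data", 3),
    ("q6", "data", 4),
    ("q7", "deployment", 2),
    ("q8", "deployment", 3),
]


def assemble_exam(bank, topic_counts, target_mean):
    """Pick questions that match the topic quotas exactly and whose mean
    difficulty is as close as possible to target_mean.

    Exhaustive search over all subsets of the right size: fine for a toy
    bank, far too slow for a real one. Returns None if no subset fits.
    """
    exam_size = sum(topic_counts.values())
    best, best_gap = None, float("inf")
    for subset in combinations(bank, exam_size):
        # Count how many questions this candidate draws from each topic.
        counts = {}
        for _, topic, _ in subset:
            counts[topic] = counts.get(topic, 0) + 1
        if counts != topic_counts:
            continue  # topic balance violated
        mean = sum(difficulty for _, _, difficulty in subset) / exam_size
        gap = abs(mean - target_mean)
        if gap < best_gap:
            best, best_gap = subset, gap
    return best


if __name__ == "__main__":
    quotas = {"modeling": 2, "data": 2, "deployment": 1}
    for question in assemble_exam(ITEM_BANK, quotas, target_mean=3.0):
        print(question)
```

With a bank of realistic size the number of subsets explodes, which is why this kind of selection is usually posed as an optimization problem rather than enumerated.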

The Optimization Edge

A Blog for Business Executives and Advanced Analytics Practitioners

Technologies: Data Science, Big Data, Optimization, Machine Learning, Artificial Intelligence, Predictive Analytics, Forecasting

Applications: Operations, Supply Chain, Finance, Health Care, Workforce, Sales and Marketing

For time-sensitive Big Data research, renting processing capacity is often better than buying and maintaining farms of dedicated computers, and it helps organizations achieve tremendous leaps in productivity and ease of use.

Leaders of a High Frequency Trading (HFT) hedge fund retained Princeton Consultants to create a state-of-the-art environment for researching and simulating their alpha and execution ideas. In the typical cycle, proposed research ideas are discussed, approved, coded, and then back-tested against prior market and related data, using different combinations of parameters. In this never-ending quest, HFT funds face several classic challenges:

More Operations Research professors and students are building and deploying applications than two years ago, bringing them closer to advanced analytics practitioners, according to our survey at the INFORMS Annual Meeting, held October 22-25 in Houston. The survey repeated, with minor modifications, the one we conducted at the same event in 2015, which found notable gaps between what students learn, what professors teach, and what practitioners need.

We can report the following:

- Gaps between practitioners and academia in programming and application development narrowed, with more than 50% of students surveyed building applications.

- The O.R. community is very flexible when solving problems; there is no dominant software product or development tool.

- MATLAB is popular as both a solver and a programming language; as an optimization tool, it is used more in academia than in industry.

- The use of modeling languages is especially varied.

We are proud that our colleague Irv Lustig received the INFORMS 2017 Volunteer Service Award for Distinguished Service. Irv has recently helped develop and improve programs and outreach to businesses and professional practitioners, a critical initiative for INFORMS. Irv points out how, since he began his career 30 years ago, the landscape for Operations Research has transformed on both the technology side and the human side.

Data availability is obviously much greater than it was before the Internet, but more exciting to Irv is the evolution of the associated tools to explore and understand data, making it easier to then choose the right data for an analytics application. Improvements in the performance of optimization software algorithms and implementations have far exceeded the improvements in hardware performance, making it possible to solve ever larger and more complex problems.

Photo - The Wagner Prize presentation team: (left to right) Ted Gifford, Daniel Vanden Brink, Tracy Opicka, Robert Randall and Ashesh Sinha.

I was proud to be part of a recent project that delivered significant, quantifiable economic savings as well as improvements in productivity and service. The project duration and Time to Payback (TTP) were short: less than four months elapsed from kick-off to initial solution deployment. In recognition of the operations research work and underlying mathematics, Princeton Consultants joined our client, Schneider, www.schneider.com, a leading transportation company, as finalists for the 2017 INFORMS Daniel H. Wagner Prize.

Princeton Consultants has implemented software systems embedding mathematical optimization for many years. We recently documented our methodology to verify that the implementation is satisfactory. We term this the Princeton Optimization Implementation Verification Methodology (POIVM). Through this cyclical 4-phase methodology, diagrammed below, we verify the robustness and reliability of optimization-based software systems, while enabling ongoing improvements to the underlying models:

Optimization is a powerful approach to driving up the value yield from a scarce and expensive asset. Space is another asset that can be as scarce as equipment and no less contentious: shelf space, space in shipping containers and freight cars, seats in auditoriums and arenas, and space in magazines and newspapers. Take ad space. It is a precious and limited asset, both for businesses needing to reach a target audience and for those who own the media outlet. Allocating ad space in a publication also represents a decision that has to be made over again each time a new edition hits the street, making it a prime candidate for optimization.

For successful implementation, it is critical to understand how data feeds the optimization system.

There are two sources of data. The first is automatic feeds. I have been involved in building systems where one database is feeding another database, which is feeding another database, and eventually it feeds the model that you have. What happens if somebody changes that middle database? Suddenly, some of those assumptions can change and the data doesn't look the same or doesn't satisfy the same characteristics.
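One way to guard against an upstream change of this kind is to check each automatic feed against the assumptions the model relies on before the data reaches the optimizer. The sketch below is only an illustration: the `validate_feed` helper, the field names (`lane`, `cost`, `capacity`), and the allowed range are hypothetical, not part of any system described here.

```python
def validate_feed(rows, required_fields, ranges):
    """Check feed rows against the model's assumptions.

    rows            -- list of dicts, one per record from the upstream feed
    required_fields -- field names that must be present and not None
    ranges          -- {field: (low, high)} inclusive bounds for numeric fields

    Returns a list of human-readable problems; an empty list means every
    assumption checked here still holds.
    """
    problems = []
    for i, row in enumerate(rows):
        missing = [f for f in required_fields if row.get(f) is None]
        if missing:
            problems.append(f"row {i}: missing {missing}")
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append(f"row {i}: {field}={value} outside [{low}, {high}]")
    return problems


if __name__ == "__main__":
    # Hypothetical feed: the second row has a negative cost, the third no lane.
    feed = [
        {"lane": "A", "cost": 10.0, "capacity": 5},
        {"lane": "B", "cost": -3.0, "capacity": 5},
        {"lane": None, "cost": 1.0, "capacity": 2},
    ]
    for problem in validate_feed(feed, ["lane", "cost", "capacity"], {"cost": (0, 1000)}):
        print(problem)
```

Running checks like these at every hand-off between databases means a change in the middle of the chain surfaces as an explicit report rather than as a silently different model input.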

Optimizers look for opportunities, even as resources become more constrained. Rather than asking, “How can we reduce costs?” optimizers attack a business challenge by taking a long, hard look at each step of an operation and asking, “What is the best way of doing this? How can we add value by getting more from the assets that are available to us?” In other words, “How can we optimize?” Instead of looking to “take away” or impose limits, optimizers look for ways to open up infinite opportunities.

An excellent example of how this shift in mind-set works can be found in a company’s call center. When it comes to customer-service calls, the command from management is typically, “Cut call-center costs.” But an optimizer, looking at call-center operations, would suggest a very different mandate. To a quant, the objective becomes, “Optimize the way that customer-service calls are handled.”

As another school year begins, I thought it worth revisiting the snapshot survey Princeton Consultants conducted at the INFORMS Annual Meeting in Philadelphia in 2015. We asked advanced analytics professors, students and practitioners about tools and approaches. The survey was designed to compare the responses of these three groups about optimization solvers and modeling languages, programming languages, and software development.

The key findings in 2015 were as follows:

• Students must learn more about building applications with modern technologies so they have the skills needed by the practice community. Practitioners valued: building web server-based applications; building applications to be deployed on the cloud; building browser-based applications; and building console-based applications.